Optical setup and data acquisition

The Multi-SIM system was built on the basis of an invented fluorescence microscope (Ti2E, Nikon). Briefly, three laser beams of 488 nm (Genesis-MXSLM, Coherent), 560 nm (2RU-VFL-P-500-560, MPB Communications) and 640 nm (LBX-640-500, Oxxius) were combined collinearly and then passed through an acousto-optic tunable filter (AOTF, AOTFnC400.650, AA Quanta Tech). Afterward, the selected laser light was expanded and sent into an illumination modulator, which was composed of a ferroelectric spatial light modulator (SLM, QXGA-3DM, Forth Dimension Display), a polarization beam splitter and an achromatic half-wave plate. Different illumination modes were generated by adjusting the patterns displayed on the SLM, for example, grating patterns of 3 phases × 3 orientations at 1.41 numerical aperture (NA) for TIRF-SIM or 1.35 NA for GI-SIM. Next, the modulated light was passed through a polarization rotator consisting of a liquid crystal cell (Meadowlark, LRC-200) and a quarter-wave plate, which rotated the linear polarization to maintain the necessary S-polarization, thus maximizing the pattern contrast for all pattern orientations. The diffraction orders, except for ±1 orders for TIRF/GI-SIM, were filtered out by a spatial mask and then relayed onto the back focal plane of the objectives (1.49 NA, Nikon). The raw SIM images excited by different illumination patterns were sequentially collected by the same objective, then separated by a dichroic beam splitter (Chroma, ZT405/488/560/ 647tpc) and finally captured with an scientific complementary metal–oxide–semiconductor camera (Hamamatsu, Orca Flash 4.0 v3). We separately optimized the illumination pattern for each color channel and SIM reconstruction algorithm according to the objective NA and corresponding excitation wavelength, resulting in superior imaging quality for all channels in multicolor imaging. The Multi-SIM system integrates diverse imaging modes (TIRF/GI, TIRF/GI-SIM, three-dimensional (3D)-SIM, stacked slice-SIM, etc.) into a single setup, which is commercially available from NanoInsights.

In the BioTISR dataset, for each type of specimen and each imaging modality, we acquired the raw data from at least 50 distinct regions of interest (ROIs). For each ROI, we acquired 2–4 groups of raw images with N phases × M orientations × T time points with a constant exposure time but escalating excitation light intensity, where (N, M, T) corresponded to (3, 3, 20) for TIRF-SIM and GI-SIM, (5, 5, 10) for nonlinear SIM and (3, 5, 10) for 3D-SIM. Each set of N × M × T raw images were averaged as diffraction-limited time-lapse WF images or stacks, which were then used as the input LR image for TISR networks. Meanwhile, the raw images acquired with the highest excitation power were reconstructed into GT-SIM images or stacks, which served as the targets in model training. For better clarity, we summarize the imaging conditions and detailed information of the BioTISR dataset in Supplementary Table 1.

In long-term live-cell imaging experiments, cells were held in a stage-top incubator equipped on the microscope (OkO Lab, H301) to maintain conditions at 37 °C with 5% CO2. We used the TIRF/GI mode and GI-SIM mode to acquire the WF or raw SIM images (which could be reconstructed into conventional SR-SIM images for comparison) and then obtained SR images using the DPA-TISR or Bayesian DPA-TISR models. The detailed imaging conditions of live-cell imaging experiments are summarized in Supplementary Table 2.

Network configurations for TISR model evaluation

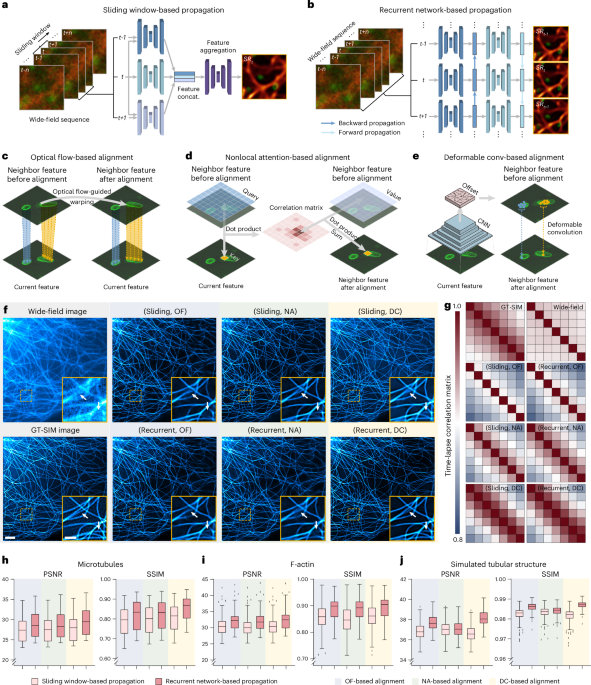

For fair comparison, we designed a template TISR model with the propagation and alignment modules replaceable, as depicted in Extended Data Fig. 2. The template TISR model was modified from the foundational BasicVSR++ neural network21. It comprises three primary modules: a feature extraction module, a propagation and alignment module and a reconstruction module. This architecture is meticulously crafted to be compatible with distinct components of the computational process. For a given image sequence X = {xi−k, xi−k+1, …, xi, …, xi+k−1, xi+k}, three identical residual blocks are used to extract features from each frame. Each residual block comprises two convolutional layers, a leaky rectified linear unit activation function and a skip connection. Before passing through the residual blocks, a convolutional layer is applied for channel augmentation. The operation of feature extraction module can be formulated as

$${x}^{1}={\rm{FE}}\left(x\right)=f\left(x\right)+{{\rm{RB}}}^{\left(3\right)}\left(\,f\left(x\right)\right),{x}\in X$$

(3)

where f(∙) is a convolutional layer and RB is a residual block.

$${{\rm{RB}}}^{\left(3\right)}\left(x\right)={\rm{RB}}\left({\rm{RB}}\left({\rm{RB}}\left(x\right)\right)\right)$$

(4)

Subsequently, the output feature maps are fed into the propagation and alignment module through two distinct schemes, referred to as SWP and RNP.

In the SWP scheme, feature maps from the central and adjacent frames within a local window are severally fed into an alignment block to refine all neighbor features towards the central frame. The alignment block consists of an alignment module, a convolutional layer and three residual blocks. In our validation, three distinct alignment modules based on OF, DC and NA are used (Extended Data Fig. 2c–e). Afterward, the resulting feature maps are concatenated and go through a 1 × 1 convolutional layer to reduce the channels. The progress of SWP can be articulated as follows:

$$\begin{array}{l}{x}_{i}^{2}\,=\,{\rm{SWP}}\left({x}_{i-k}^{1},\ldots ,{x}_{i}^{1},\ldots ,{x}_{i+k}^{1}\right)\\\,\quad\;=\,f\left({\rm{AB}}\left({x}_{i-k}^{1},\,{x}_{i}^{1}\right)\oplus \ldots \oplus {x}_{i}^{1}\oplus \ldots \oplus {\rm{AB}}\left({x}_{i+k}^{1},\,{x}_{i}^{1}\right)\right)\end{array}$$

(5)

where \(\oplus\) denotes channel-wise concatenation and AB is an alignment block.

In the RNP scheme, the feature maps from adjacent frames generated by the feature extraction module propagate forward and backward in the time dimension following the second-order grid propagation manner21. Given the feature map \({x}_{i}^{1}\) and corresponding features propagated from the first-order neighborhood (hi+1,hi−1) and second-order neighborhood (hi+2,hi−2), we have

$$\begin{array}{l}{g}_{i}^{\,\rm{backward}}\,=\,{{\rm{RB}}}^{\left(3\right)}\left(\;f\left({\rm{AB}}\left({h}_{i+2},{h}_{i+1},{x}_{i}^{1}\right)\oplus {x}_{i}^{1}\right)\right)\\\qquad\;\;\,{x}_{i}^{2}\,=\,{g}_{i}^{\rm{forward}}={\rm{RNP}}\left({x}_{i-k}^{1},\ldots ,{x}_{i}^{1},\ldots ,{x}_{i+k}^{1}\right)\\\,\qquad\qquad=\,{{\rm{RB}}}^{\left(3\right)}\left(f\left({\rm{AB}}\left({h}_{i-2},{h}_{i-1},{x}_{i}^{1}\right)\oplus {g}_{i}^{\,\rm{backward}}\oplus {x}_{i}^{1}\right)\right)\end{array}$$

(6)

where \({g}_{i}^{\,\rm{backward}}\) and \({g}_{i}^{\rm{forward}}\) represent the feature computed at the ith timestep in backward and forward propagation, respectively.

In the OF-based alignment block, we compute the OF between neighbor frames using SPyNet46. If we denote the OF from \({x}_{i-k}\) to xi as \({f}_{i-k\to i}\), the neighbor features \({h}_{i-k}\) will be aligned according to \({x}_{i}\) by directly warping using the OF computed from \({x}_{i-k}\) and xi:

$${\bar{h}}_{i-k}^{\rm{OF}}=W\left({h}_{i-k},\,{f}_{i-k\to i}\right)$$

(7)

where W denotes the spatial warping operation.

In DC-based alignment block, the OF \({f}_{i-k\to i}\) is used to prealign the features. The aligned features are then concatenated with the current features \({x}_{i}^{1}\) and OF \({f}_{i-k\to i}\) to compute the DC offset \({o}_{i-k\to i}\) and modulation mask \({m}_{i-k\to i}\) with two sequential residual blocks:

$${o}_{i-k\to i}\,=\,{f}_{i-k\to i}+{{\rm{RB}}}^{\left(2\right)}\left({\bar{h}}_{i-k}^{\rm{OF}}\oplus {x}_{i}^{1}\oplus \,{f}_{i-k\to i}\right)$$

(8)

$${m}_{i-k\to i}\,=\sigma \left({{\rm{RB}}}^{\left(2\right)}\left({\bar{h}}_{i-k}^{\rm{OF}}\oplus {x}_{i}^{1}\oplus {f}_{i-k\to i}\right)\right)$$

(9)

where σ denotes the sigmoid activation function. A DC layer is then applied to the unwrapped feature \({h}_{i-k}\):

$${\bar{h}}_{i-k}^{\rm{DC}}=D\left({h}_{i-k}{{;}}{o}_{i-k\to i},{m}_{i-k\to i}\right)$$

(10)

where D denotes a DC.

In NA-based alignment block, three fully connected layers are initially used to generate the embedded query, key and value:

$$\begin{array}{ccc}{h}_{i-k}^{\rm{Query}} & = & F\left({h}_{i}\right)\\ {h}_{i-k}^{\rm{Key}} & = & F\left({h}_{i-k}\right)\\ {h}_{i-k}^{\rm{Value}} & = & F\left({h}_{i-k}\right)\end{array}$$

(11)

where F denotes a fully connected layer. Then, the NA mechanism is applied for feature alignment:

$${\bar{h}}_{i-k}^{\rm{NA}}=F\left({\rm{Softmax}}\left({h}_{i-k}^{\rm{Query}}{\rm{\otimes }}{h}_{i-k}^{\rm{Key}}\right){\rm{\otimes }}{h}_{i-k}^{\rm{Value}}\right)$$

(12)

where \({\rm{\otimes }}\) refers to dot product operation. In practice, the embedded features have half of the channels compared to the original feature and we adopt a neighborhood attention mechanism47, which can be regarded as a more efficient implementation of NA in our experiments, achieving similar SR performance.

Lastly, the aligned hierarchical features undergo through the reconstruction module consisting of three residual blocks, a pixel shuffle layer and a concluding convolutional layer to generate the SR residuals, which are then added up with upsampled raw input images, yielding final TISR images. The operation of the reconstruction module can be formulated as follows:

$${x}_{i}^{3}\,=\,f\left({\rm{PixelShuffle}}\left(\,{{\rm{RB}}}^{\left(3\right)}\left({x}_{i}^{2}\right)\right)\right)+{\rm{Upsample}}\left({x}_{i}\right)$$

(13)

Taking the final output of RM as \({\hat{y}}_{i}\) and the GT as yi for the ith frame, the overall objective function can be formulated as

$${\mathscr{L}}\left({\left\{{\hat{y}}_{i}\right\}}_{i=1}^{n},{\left\{\,{y}_{i}\right\}}_{i=1}^{n}\right)=\frac{1}{n}\mathop{\sum }\limits_{i=1}^{n}\,\left|\,{\hat{y}}_{i}\,-\,{y}_{i}\right|$$

(14)

The trainable parameter numbers of TISR models constructed as described are listed as follows: 5.58 million (sliding, OF), 5.59 million (sliding, NA), 7.97 million (sliding, DC), 3.95 million (recurrent, OF), 3.71 million (recurrent, NA) and 5.40 million (recurrent, DC).

Network architecture of DPA-TISR

DPA-TISR was constructed on the basis of the optimal TISR baseline model (that is, adopting recurrent network for propagation and DC for alignment), following the evaluation conclusions in Fig. 1. In DPA, as depicted in Fig. 2a and Extended Data Fig. 4b, the phase-space alignment mechanism serves as a complementary module to spatial DC, synergistically improving the alignment between neighborhood feature maps \({h}_{i-k}\) and current feature maps hi. Specifically, the DPA begins with the real-valued fast Fourier transform (denoted as FFT(∙), implemented with torch.fft.rfft) to extract the amplitude and phase of both \({h}_{i-k}\) and hi:

$$\begin{array}{ccc}{h}_{i}^{\rm{phase}} & = & {\rm{Angle}}({\rm{FFT}}({h}_{i}))\\ {h}_{i-k}^{\rm{phase}} & = & {\rm{Angle}}({\rm{FFT}}({h}_{i-k}))\\ {h}_{i-k}^{\rm{amplitude}} & = & {\rm{Abs}}\left({\rm{FFT}}\left({h}_{i-k}\right)\right)\end{array}$$

(15)

where \({\rm{Angle}}(\bullet )\) and \({\rm{Abs}}(\bullet )\) represent the operation to obtain the element-wise angle and absolute value of the features. Then. the concatenation of phases from current and neighborhood feature maps further goes through a phase-space convolution module, containing one convolutional layer followed by two residual blocks and a skip connection:

$$\begin{array}{ccc}\delta \left({h}_{i-k}^{\rm{phase}},{h}_{i}^{\rm{phase}}\right) & = & {{{\rm{RB}}}}^{\left(2\right)}(\,f(p))+{h}_{i-k}^{\rm{phase}}\\ p & = & {h}_{i}^{\rm{phase}}\oplus {h}_{i-k}^{\rm{phase}}\end{array}$$

(16)

An inverse real-valued FFT (denoted as iFFT(∙), implemented with torch.fft.irfft) is then used to reconstruct the space-domain feature maps from the obtained amplitude and phase components:

$${h}_{i-k}^{\rm{refined}}={\rm{iFFT}}\left({h}_{i-k}^{\rm{amplitude}}\times {e}^{i\times \delta \left({h}_{i-k}^{\rm{phase}},{h}_{i}^{\rm{phase}}\right)}\right)$$

(17)

The phase-refined feature maps are then fed into the DC module as depicted in Extended Data Fig. 2 for subsequent spatial alignment. The overall architecture of DPA-TISR is illustrated in Extended Data Fig. 4.

To adapt DPA-TISR to SIM reconstruction and volumetric SR inference, we further modified it into DPA-TISR-SIM and 3D DPA-TISR, respectively. The primary modifications are depicted in Supplementary Fig. 12 and described in detail in Supplementary Note 5.

Assessment metric calculation

We performed image normalization for GT-SIM and TISR images following a commonly used procedure5,11. Specifically, each GT-SIM image stack Y was normalized by dividing by the maximum value, then blurred by a 3 × 3 size Gaussian kernel with the s.d. = 0.4 (denoted as ρ(∙)) to mitigate the SIM reconstruction artifacts:

$${\rm{Norm}}\left({{Y}}\,\right)=\rho \left(\frac{{{Y}}}{\max \left({{Y}}\,\right)}\right)$$

(18)

Before computing the assessment metrics (that is, PSNR and SSIM), a linear transformation was applied to each SR image stack H:

$${{\rm{Trans}}}_{{\rm{linear}}}\left({{H}}\,\right)=\alpha {{H}}+\beta$$

(19)

where \({\rm{\alpha }}\) and \({\rm{\beta }}\) were chosen by solving the convex optimization problem:

$$\mathop{{\rm{arg}}\max }\limits_{\alpha ,\beta }{\left|\left|\alpha {{H}}+\beta -{\rm{Norm}}\left({{Y}}\,\right)\right|\right|}_{2}$$

(20)

where \({{||}\bullet {||}}_{2}\) denotes L2 normalization. The optimized \(\alpha\) and \(\beta\) result in an MSE-minimized linear transformation of \({{H}}\), effectively scaling and translating every pixel to match the dynamic range of the GT.

Three types of metrics were used for quantitatively evaluating the performance in output fidelity, resolution and temporal consistency. PSNR and SSIM were used to evaluate pixel-level similarity between the inferred SR images and GT-SIM images. Decorrelation analysis48 was applied to quantify the image resolution. For temporal consistency assessment, a time-lapse Pearson’s correlation matrix was used to visualize the similarity between adjacent SR images \({\{{H}_{i}\}}_{i=1}^{n}\). The Pearson correlation between images x and y is calculated by

$${\rm{Corr}}\left(x,y\right)=\frac{{\rm{E}}\left[\left(x-{\mu }_{x}\right)\left(y-{\mu }_{y}\right)\right]}{{\sigma }_{x}{\sigma }_{y}},{x},y{\rm{\epsilon }}{\left\{{H}_{i}\right\}}_{i=1}^{n}\,$$

(21)

where μ and σ denote the mean value and s.d. of corresponding images, respectively, and E represents the arithmetic mean.

Comparison of DPA-TISR with other models

For the comparative analysis between TISR and SISR models in Figs. 2 and 4 and Extended Data Fig. 7, we modified the 3D DPA-TISR into its SISR counterpart by excluding all operations related to alignment and propagation while keeping other network components (that is, the feature extraction module, reconstruction module and residual blocks in the alignment module), identical to (3D) DPA-TISR. As such, the temporal feature aggregation capability of 3D DPA-TISR was dismissed, yielding a pure SISR manner. Considering that the above SISR modification may cause a reduction in trainable parameters (for example, ~7 million for DPA-TISR versus ~5.5 million for SISR), we further compared DPA-TISR to its SISR counterparts with parameter compensation by adding additional residual blocks into SISR models (Supplementary Fig. 18), which verifies that the superiority of DPA-TISR over SISR mainly comes from the use of temporal information, rather than gain of trainable parameters.

In the comparative analysis between DPA-TISR and other TISR models (that is, VRT and BasicVSR++), we used their publicly available implementations on GitHub21,27. All networks were trained with the same dataset and configurations (initial learning rate, learning rate decay, batch size, etc.) for fair comparison. It is noteworthy that the patch size of VRT was adjusted to 64, half that for other models, to ensure similar graphics processing unit (GPU) memory usage.

Implementation details of TISR models

The implementation procedure of TISR model typically includes three steps: training data preparation, model training and model inference. In this work, all TISR models were trained using the BioTISR dataset (Supplementary Table 1) unless otherwise stated. As described above, there are over 50 groups of paired WF–GT sequences for each biological specimen. Typically, we selected 40 of these groups for training and validation and used the remaining ~10 groups for testing. Before training, each group of data was augmented into time-lapse image pairs with the size of 128 × 128 × 7 for WF input and 256 × 256 × 7 or 384 × 384 × 7 for corresponding GT-SIM images by random cropping, horizontal or vertical flipping and random rotation for further enrichment and avoiding overfitting. In particular, we conducted an evaluation on DPA-TISR models trained with different lengths of input sequence and found that the input length of 7 was optimal to balance the computation efficiency and SR performance (Supplementary Fig. 19). In the live-cell experiments, we independently trained a specified DPA-TISR model for each type of biological structures (that is, each color channel) for best SR performance.

The training and inference were performed on a computer workstation equipped with four GeForce RTX 3090 graphic processing cards (NVIDIA) with Python 3.6 and PyTorch 1.12.1. In the model training procedure, the batch size for all experiments was set to 3 and all models were trained using the Adam optimizer with an initial learning rate of \(5\times {10}^{-5}\), which was decayed by 0.5 for every 1,000 epochs. The training process typically took 18 h within approximately 3,000 epochs. Once the networks were trained, TISR models could be applied to process other images of the same specimen, typically taking about 1 s to reconstruct a seven-frame SR stack of 1,024 × 1,024 pixels. The time required for both training and inference decreases linearly with the increase in the number of GPUs used and multi-GPU acceleration has been incorporated into our publicly available Python codes. Detailed instructions about environment installation, inference with pretrained model, training new models, confidence correction fine-tuning, etc. can be found in the GitHub repository of Bayesian DPA-TISR (https://github.com/liushuran2/Bayesian_DPA_TISR).

Calculation of uncertainty

The reliability of DPA-TISR predictions can be quantified through two types of uncertainties: model uncertainty and data uncertainty, also referred to as epistemic uncertainty and aleatoric uncertainty, respectively, in Bayesian analysis16.

Considering the non-Bayesian DPA-TISR, represented by \({f}_{\theta }\), where \(\theta\) indicates the trainable parameters, the output SR image sequence is denoted as \(\hat{{\rm{y}}}={f}_{\theta }(x)\). The network parameters are chosen by minimizing the pixel-wise distance between GT y and \(\hat{{\rm{y}}}\). Taking the L1 loss as an example, the objective function can be expressed as follows:

$${{\mathscr{L}}}_{L1}\left(\,\hat{y},y\right)=\frac{1}{{{T}}}\frac{1}{{{N}}}\mathop{\sum }\limits_{{\rm{t}}=1}^{{{T}}}\mathop{\sum }\limits_{{\rm{n}}=1}^{{{N}}}|\,{y}_{n}^{t}-{\hat{y}}_{n}^{t}|\,$$

(22)

where T denotes the number of time points and N denotes the number of pixels in a single image.

Inspired by previous work11,17, we designed the Bayesian DPA-TISR that predicts both the intensity \({\hat{y}}_{n}\) and the scale \({\hat{\sigma }}_{n}\) for every pixel. Instead of considering each pixel as a single intensity value, we modeled it as a Laplace distribution empirically:

$${p}_{\rm{Laplace}}\left({y;}\hat{y},\hat{\sigma }\right)=\frac{1}{2\hat{\sigma }}\exp \left(-\frac{\left|y-\hat{y}\right|}{\hat{\sigma }}\right)$$

(23)

In this way, the scale \(\hat{\sigma }\) can be regarded as a measurement of the data uncertainty. Then, the output SR image \(\hat{y}\) and the scale \(\hat{\sigma }\) can be simultaneously addressed by minimizing the negative log-likelihood (NLL) function:

$${{\mathscr{L}}}_{\rm{NLL}}\left(\hat{y},y\right)=\frac{1}{{{T}}}\frac{1}{{{N}}}\mathop{\sum }\limits_{t=1}^{{{T}}}\mathop{\sum }\limits_{n=1}^{{{N}}}\frac{\left|{y}_{n}^{t}-{\hat{y}}_{n}^{t}\right|}{{\hat{\sigma }}_{n}^{t}}+\log {\hat{\sigma }}_{n}^{t}\,$$

(24)

To characterize the model uncertainty, we adopted a Bayesian approximation approach17 that uses a distribution over model parameters by incorporating concrete dropout after convolutional layers. Within each inference, the dropout layers in Bayesian DPA-TISR randomly zeroized half of the neurons, thus yielding a distinctive model. Then, by aggregating a certain number (denoted as M) of the outcomes of the stochastic forward propagation, the SR results of Bayesian DPA-TISR can be obtained by averaging the predicted intensity of each trial:

$${\hat{y}}_{\rm{mean}}=\frac{1}{{{M}}}\mathop{\sum }\limits_{m=1}^{{{M}}}{\hat{y}}^{\left(m\right)}$$

(25)

where \({\hat{{{y}}}}^{(m)}\) is the predicted intensity map from the \({m}{\rm{th}}\) network. The model uncertainty is then quantified by calculating the standard deviation of the predicted results:

$${\hat{\sigma }}_{\rm{model}}=\sqrt{\frac{1}{{{M}}}\mathop{\sum }\limits_{m=1}^{{{M}}}{\left({\hat{y}}^{\left(m\right)}-{\hat{y}}_{\rm{mean}}\right)}^{2}}\,$$

(26)

Subsequently, the overall data uncertainty is assessed as follows:

$${\hat{\sigma }}_{\rm{data}}=\sqrt{\frac{1}{{{M}}}\mathop{\sum }\limits_{m=1}^{{{M}}}{\left({\hat{\sigma }}^{\left(m\right)}\right)}^{2}}$$

(27)

where \({\hat{\sigma }}^{(m)}\) is the predicted scale map from the \({m}{\rm{th}}\) network.

Calculation of confidence map

To enhance the integration of model and data uncertainty information and provide biologists with a more intuitive measurement of uncertainty, we take one step further by using a pixel-wise mixture probability distribution to generate an integrated confidence map.

During the inference stage, we independently generated M models \({\theta }^{(m)}\), differing only through dropout layers as depicted above. Considering inferences from M independent models, each pixel \(i\) follows a mixed Laplace distribution (example in Fig. 4a):

$${\hat{f}}^{i}\left({x}_{p}\right)\equiv {p}_{\varOmega }^{i}\left({x}_{p}\right)=\frac{1}{M}\mathop{\sum }\limits_{m=1}^{M}{p}_{\rm{Laplace}}^{i}\left({x}_{p}{{;}}{\hat{{\rm{y}}}}^{\left(m\right)},{\hat{\sigma }}^{\left(m\right)}\right)$$

(28)

where \(\varOmega ={\{{\theta }^{(m)}\}}_{m=1}^{M}\) and \({x}_{p}\) is the coordinate of the PDF.

Accordingly, we defined the credible interval \({A}^{\varepsilon }=[{\hat{y}}_{\rm{mean}}-\varepsilon ,{\hat{y}}_{\rm{mean}}+\varepsilon ]\) and the interval length ε. Then, the corresponding confidence pε,i for pixel \(i\) is defined as the probability that the true value y falls within Aε:

$${p}^{\varepsilon ,i}\equiv {\int }_{{\hat{y}}_{\rm{mean}}-\varepsilon }^{{\hat{y}}_{\rm{mean}}+\varepsilon }{\hat{f}}^{i}\left({x}_{p}\right)d{x}_{p}$$

(29)

After defining a proper value for \({{\varepsilon }}\) (Supplementary Note 7), which was empirically set to 0.04 by default in our experiments, a confidence map can be generated to indicate the probability that the true value falls within the predicted credible interval.

Implementation details of Bayesian DPA-TISR

On the basis of the uncertainty and confidence calculation mentioned above, we designed Bayesian DPA-TISR, which differs from DPA-TISR in three main aspects. First, we integrated a dropout layer after each convolutional layer in the feature extraction module and reconstruction module, randomly deactivating neurons during both training and prediction stages. Notably, we did not apply dropout in the propagation and alignment module as we empirically found that any dropout here resulted in noticeable SR performance decline speculatively because of the temporal information loss during propagation and alignment (Supplementary Fig. 14b), while appropriate dropout (that is, with dropout rate lower than 0.5) in the feature extraction module and reconstruction module slightly benefited the overall SR performance (Supplementary Fig. 14c). Second, we adjusted the output channel number of the last convolutional layer in DPA-TISR from one to two, representing the predicted mean and scale, respectively. An additional sigmoid function was applied to the predicted scale channel to ensure its non-negativity. Third, the objective function was modified from L1 loss to NLL loss as formulated in equation (24).

During inference stage, we independently executed the trained network with dropout six times (that is, M = 6), averaged the results as the final SR output and generated a confidence map as described previously. In particular, in long-term confidence-quantifiable TISR imaging experiments, we observed that the predicted scales tended to increase with the corresponding inferred intensities, making it unintuitive to discern which area of an output image is reliable or unreliable solely from the confidence map. To address this issue, we adopted an intensity-aware confidence generation scheme. Instead of using a constant \(\varepsilon\) of the credible interval \({A}^{\varepsilon }=[\,\hat{{{y}}}-\varepsilon ,\hat{{{y}}}+\varepsilon ]\) (hereafter, \({\hat{y}}_{\rm{mean}}\) is denoted by \(\hat{y}\) for simplicity), we modified the interval length \(\varepsilon\) as follows:

$$\bar{\varepsilon }={\rm{Maximum}}\left({\rm{\gamma }}\times {\rm{Abs}}\left(\,\hat{{{y}}}\right),\varepsilon \right)$$

(30)

where the scalar scaling factor γ and ε were empirically set to 0.2 and 0.04, respectively, in our experiments. Using \(\bar{\varepsilon }\) essentially defines a threshold of 0.2 for foreground segmentation. For pixels with values greater than 0.2, which are more likely to belong to the actual structure, we assigned a proportion (0.2) of their absolute intensity as the interval length. Conversely, for pixels with values less than 0.2, typically representing the background region, we assigned a constant value of 0.04 as the interval length, thereby rationalizing the confidence calculation within background regions of the inferred TISR image.

Calculation of ECE and reliability diagram

To evaluate the effectiveness of the uncertainty and confidence map, the reliability diagram that compares the empirical accuracy to averaged confidence was computed following a standard procedure11 in our experiments. Well-calibrated confidence in the reliability diagram should yield confidence values similar to accuracy, resulting in a diagonal diagram.

Specifically, the empirical accuracy is defined as the proportionality that the GT \(y\) falls into the credible interval \({A}^{\varepsilon }=[\,\hat{{{y}}}-\varepsilon ,\hat{{{y}}}+\varepsilon ]\). Consequently, the accuracy and confidence are specified as follows:

$${\rm{Accuracy}}\left(\,{\hat{y}},{y\; |}{{S}},{{\varepsilon }}\right)=\frac{1}{\left|{{S}}\right|}\sum _{i{{\epsilon }}{{S}}}1\left[\,{y}_{{i}}{{\epsilon }}{A}^{\varepsilon }\right]=\frac{1}{\left|{{S}}\right|}\sum _{i{{\epsilon }}{{S}}}1\left\{{y}_{{i}}{{\epsilon }}\left[{{{\hat{y}}}}_{i}-\varepsilon ,{{{\hat{y}}}}_{i}+\varepsilon \right]\right\}$$

(31)

$${\rm{Confidence}}\left({\hat{y}},{\hat{f}}\,{|}{{S}},{{\varepsilon }}\right)=\frac{1}{\left|{{S}}\right|}\sum _{{{i}}{{\epsilon }}{{S}}}{p}^{\varepsilon }=\frac{1}{\left|{{S}}\right|}\sum _{{{i}}{{\epsilon }}{{S}}}{\int }_{{{{\hat{y}}}}_{i}-\varepsilon }^{{{{\hat{y}}}}_{i}+\varepsilon }{\hat{f}}^{\,i}\left(x\right){{\rm{d}}x}$$

(32)

where \({{S}}\) denotes a subset of all pixels, 1(∙) denotes the indicator function and ε > 0 determines the length of the credible interval around each \(\hat{{{y}}}\).

To construct a reliability diagram with K groups, we divided the value of confidence into K intervals segmented by τ0, τ1, …, τK. For pixels in \({S}_{k}^{\varepsilon }=\{{p}^{\varepsilon }\epsilon ({\tau }_{k-1},{\tau }_{k}]\}\), we plotted \({\rm{Confidence}}(\,{{\hat{y}},{\hat{f}}\,{|}S}_{k}^{\varepsilon },{{\varepsilon }})\) against \({\rm{Accuracy}}(\,{{\hat{y}},{y\; |}S}_{k}^{\varepsilon },{{\varepsilon }})\) to obtain the final reliability diagram. Furthermore, the ECE was determined as the weighted average of the absolute differences between the accuracy and confidence:

$${\rm{ECE}}\left({\hat{y}},{\hat{f}},{y|}{{\varepsilon }}\right)=\mathop{\sum }\limits_{{{k}}=1}^{{{K}}}\frac{\left|{S}_{k}^{\varepsilon }\right|}{{{N}}}\left|{\rm{Confidence}}\left({\hat{y}},{\hat{f}}\,{|}{S}_{k}^{\varepsilon },{{\varepsilon }}\right)-{\rm{Accuracy}}\left({{\hat{y}},{y\; |\; S}}_{k}^{\varepsilon },{{\varepsilon }}\right)\right|$$

(33)

Confidence correction for Bayesian DPA-TISR

Recognizing the disparity between the estimated confidence and actual accuracy (that is, overconfidence for most Bayesian neural networks), we developed an iterative fine-tuning framework to eliminate the ECE between the estimated confidence and accuracy. During the fine-tuning stage, the objective function was modified from equation (24) as follows:

$${{\mathscr{L}}}_{{\rm{fine}}-{\rm{tuning}}}\left(\,\hat{y},y\right)={{\mathscr{L}}}_{\rm{NLL}}\left(\,\hat{y},y\right)+\alpha \times {R}_{\rm{confidence}}\left(\hat{y},\hat{f},y\right)$$

(34)

where \({R}_{\rm{confidence}}\) is the CCR and \(\alpha\) is a weighting scalar to balance \({{\mathscr{L}}}_{{NLL}}\) and \({R}_{\rm{confidence}}\), which was empirically set to 0.1 in our experiments. CCR comprises two parts:

$${R}_{\rm{confidence}}\left({\hat{y}},{\hat{f}},y\right)={\rm{ECE}}\left({\hat{y}},{\hat{f}},y{{|}}{{\varepsilon }}\right)+k\times {\rm{confidence}}\left({\hat{y}},{\hat{f}}\,{{|}}{{\varepsilon }}\right)$$

(35)

where the second term, \({\rm{confidence}}\left({\hat{y}},{\hat{f}}\,|{{\varepsilon }}\right)\), is the average confidence of the outputs and the corresponding weighting scalar \(k\) aims at adjusting overall average confidence of prediction. Positive values of k suppress overconfidence and negative values of k restrain underconfidence, which is the key variable in our optimization framework. We adopted a combined strategy of linear searching and parabola fitting to determine the optimal value of k.

In the optimization process, a relative ECE (rECE) of the original trained network, denoted as E0, is first calculated by

$${\rm{rECE}}\left({\hat{y}},{\hat{f}},y{{|}}{{\varepsilon }}\right)=\mathop{\sum }\limits_{{{k}}=1}^{{{K}}}\frac{\left|{S}_{k}^{\varepsilon }\right|}{{{N}}}\left({\rm{Confidence}}\left({{\hat{y}},{\hat{f}}\,{{|}}S}_{k}^{\varepsilon },{{\varepsilon }}\right)-{\rm{Accuracy}}\left({\hat{y}},y{{|}}{S}_{k}^{\varepsilon },{{\varepsilon }}\right)\right)$$

(36)

of which the sign is used to determine the initial direction for subsequent optimization:

$${k}_{1}=0.5\times {\rm{Sgn}}\left({E}_{0}\right)$$

(37)

$${k}_{2}={k}_{1}-{\Delta} \times {\rm{Sgn}}\left({E}_{0}\right)$$

(38)

$${\rm{Sgn}}\left(x\right)=\left\{\begin{array}{c}1,\,x\ge 0\\ -1,\,x < 0\end{array}\right.$$

(39)

where \({\Delta}\) is the step size set to 0.1 by default. Next, the first two trials of fine-tuning are independently performed with \({k}_{1}\) and \({k}_{2}\), respectively, using the objective function described in equation (34), after which we obtain two new ECE values of the fine-tuned networks, denoted as \({E}_{1}\) and \({E}_{2}\). If \({E}_{1} < {E}_{2}\), we exchange \({k}_{1}\) and \({k}_{2}\), as well as the corresponding \({E}_{1}\) and \({E}_{2}\), and reverse the searching direction (that is, reset \({\Delta}\) as −0.1) to ensure that \({k}_{2}\) is the ECE-descent direction compared to \({k}_{1}\). Afterward, the linear searching process continues along this descent direction,

$${k}_{i}={k}_{i-1}-{\Delta} \times {\rm{Sgn}}\left({E}_{0}\right),{i}\ge 3$$

(40)

until finding a \({k}_{i}\) value that satisfies \({E}_{i} > {E}_{i-1}\), where \({E}_{i}\) denotes the ECE value of the fine-tuned model with \({k}_{i}\). We then use the quadratic polynomial fitting to find the optimal \({k}_{* }\) value according to the three latest weighting scalars \({k}_{i},\,{k}_{i-1},\,{k}_{i-2}\) and corresponding ECE values \({E}_{i},{{E}}_{i-1},\,{E}_{i-2}\):

$$\bar{{k}_{1}}=\frac{1}{2}\left({k}_{i}+{k}_{i-1}\right),\,\bar{{k}_{2}}=\frac{1}{2}\left({k}_{i-1}+{k}_{i-2}\right)$$

(41)

$${\beta }_{1}=(E_{i}-{E}_{i-1})/(k_{i}-{k}_{i-1}),\,{\beta }_{2}=\frac{({E}_{i-1}-{E}_{i-2})}{(k_{i-1}-{k}_{i-2})}$$

(42)

$$\dot{\beta }=\left({\beta }_{1}-{\beta }_{2}\right)/\left(\bar{{k}_{1}}-\bar{{k}_{2}}\right)$$

(43)

$${k}_{* }=\bar{{k}_{2}}-{\beta }_{2}/\dot{\beta }$$

(44)

One final fine-tuning is carried out using the optimal \({k}_{* }\) in the objective function to obtain a confidence-calibrated Bayesian DPA-TISR model. During the fine-tuning stage, the model was trained using the Adam optimizer with an initial learning rate of \(5\times {10}^{-5}\), which is decayed following a cosine annealing strategy over a course of 30 epochs. The overall fine-tuning process typically takes less than 1 h. The workflow diagram of the confidence correction is shown in Supplementary Fig. 20.

Mito–PO contact quantification

The contact level between Mito and POs was evaluated by confidence-weighted MOC. Considering that Mito–PO contact sites were principally situated at the periphery of POs, we first identified the boundary of each PO using the following procedure: (1) the background of the ROI of the PO was estimated by applying a Gaussian filter with s.d. = 10 pixels; (2) the TISR image was smoothed using another Gaussian filter with s.d. = 1 pixel and then the estimated background was subtracted; (3) a binary mask was generated by setting a threshold to the background-subtracted image; (4) the boundary of each PO was extracted using the Sobel operator; and (5) the PO boundary image was convolved with the equivalent PSF of SIM, thereby delineating potential contact regions of POs. Next, following steps 1–3, a binary Mito mask, denoted as \({{{M}}}_{{\rm{Mito}}}\), was calculated.

We reasoned that the regions with higher confidence should have higher weights in MOC calculation. Therefore, we applied the confidence map estimated by the Bayesian DPA-TISR model as adaptive wights to rationalize the quantification of Mito–PO contacts as follows:

$${\rm{MOC}}=\frac{{\sum }_{{{i}}}{{{Y}}}_{{{i}}}\bullet {{{M}}}_{{\rm{Mito}},{{i}}}\bullet {{{C}}}_{{\rm{Mito}},{{i}}}}{{\sum }_{{{i}}}{{{Y}}}_{{{i}}}}$$

(45)

where \({{{Y}}}_{{{i}}}\), \({{{M}}}_{{\rm{Mito}},{{i}}}\) and \({{{C}}}_{{\rm{Mito}},{{i}}}\) denote the value of the \({i}{\rm{th}}\) pixel in the ROIs of the PO boundary image \({{Y}}\), Mito mask \({{{M}}}_{{\rm{Mito}}}\) and the confidence map \({{{C}}}_{{\rm{Mito}}}\).

Cell culture, transfection and staining

COS-7 and HeLa cells, as well as their stable cell lines, were cultured in DMEM (Gibco, 11965092), supplemented with 10% FBS (Gibco, 10099141C) and 1× penicillin–streptomycin (Thermo Fisher Scientific, 15140122) at 37 °C in a Thermo Fisher Scientific Heracell 150i CO2 incubator. SUM159 cells were cultured in DMEM/F12K medium supplementary with 5% FBS and 1% penicillin–streptomycin solution.

For live-cell imaging, the 35-mm coverslips were precoated with 50 μg ml−1 collagen and 1 × 105 cells were seeded onto coverslips. For transient transfection, cells were transfected with plasmids using Lipofectamine 3000 (Invitrogen, L3000150) according to the manufacturer’s protocol 12 h after plating. Cells were imaged for 12 h after transfection. Where indicated, the cells transfected with Halo-tagged plasmids were labeled with 10 nM JF549 ligand for 15 min according to the published protocol49. The cells were rinsed with fresh medium to remove unbound ligand and imaged immediately afterward. The plasmids used in transient transfection included Lifeact–mEmerald, Lifeact–SkylanNS, Clathrin–mEmerald, Clathrin–mCherry, 3×mEmerald–Ensconsin, Lamp1–Halo, 2×mEmerald–Tomm20, TFAM–mEmerald, PKMO–Halo and PMP–Halo.

Statistics and reproducibility

Figures 1h–j and 2e,f and Extended Data Figs. 3c,d, 5f,h, 6c,d and 7e were plotted in Tukey box-and-whisker format. The box extends from the 25th and 75th percentiles and the line in the middle of the box indicates the median. The upper whisker represents the larger value between the largest data point and the 75th percentile plus 1.5× the interquartile range (IQR) and the lower whisker represents the smaller value between the smallest data point and the 25th percentile minus 1.5× the IQR. Data points larger than the upper whisker or smaller than the lower whisker were identified as outliers and are displayed as black spots.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.